Summary

We are going to implement the iterated 2-D convex hull algorithm in parallel under the shared address space model using OpenMP. We will compare this to an efficient sequential convex hull algorithm like Graham scan and analyze the relative performance benefits on both the GHC and PSC machines.

Background

Finding the convex hull of a set of points is a classical problem in computer science with numerous practical applications. These include maximization problems in economics, 3-D modeling in graphics, and notably computing the Voronoi diagram and Delaunay triangulation of 2-D points, which itself has dozens of additional applications.

There are many known algorithms for efficiently computing the convex hull, though most of them are inherently sequential in nature. However, a relatively recent publication by CMU professor Guy Blelloch found that there is potential to parallelize the randomized incremental approach to computing the convex hull. As far as we know, however, there has been no attempt to implement the algorithm(s) described in the paper efficiently on real machines. As such, that is what we decided to tackle with this project.

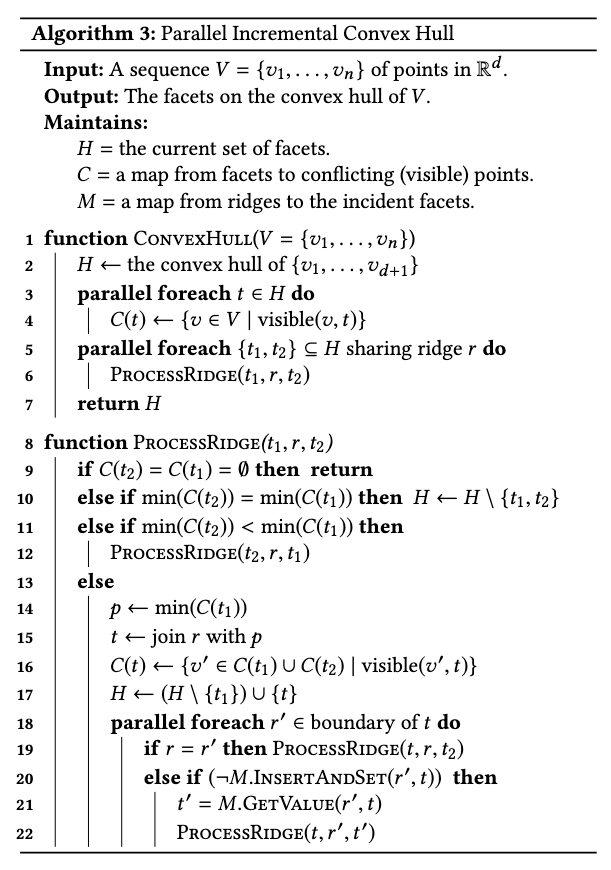

An outline of the pseudocode for the parallel randomized incremental convex hull can be seen above. Here, we can see that the potential for parallelism is denoted using the parallel foreach keyword. However, translating this into real code is not as straightforward as it may seem. First of all, it can be seen that there is contention for the \( C \) and \( H \) data structures, so we will have to implement these on top of a layer of synchronization. The order of execution is also not explicit and potentially a huge factor for optimization, since we'd ideally like to execute the parallel operations layer-by-layer in the dependency graph. These will all be interesting considerations we have to deal with when implementing the algorithm on a physical machine and maximizing the speedup we observe.

Challenges

As mentioned above, there are two key challenges to implementing this in parallel. Firstly the algorithm relies on several data structures that every thread contends for: namely, the array \( C \) as well as the maps \( H \) and \( M \). Ensuring synchronization for all of these will be challenging to do efficiently. While we could just use a single global lock (or equivalently OpenMP's critical pragma), this could be a source of major slowdowns in our code. Because of this, we anticipate needing much more sophisticated synchronization strategies in order to maximize performance. Figuring these strategies out will require careful analysis of the access patterns, as well as decisions about how to most efficiently construct the data structures to support the needed operations efficiently in parallel. While this isn't a problem for \( C \), for the pair of maps coming up with an efficient parallel implementation may be challenging.

Another challenge will be figuring out the optimal order of execution and how to maximally parallelize those operations. There exist many independent trees of dependencies, so figuring out how to most efficiently schedule these across our processors will be key to achieving a good parallel speedup. There is also a high degree of recursion in this code. Determining the optimal scheduling policy for the layers of recursive parallel for loops is a non-trivial challenge with no obvious solution, one that we'll need to do analysis of our code and benchmarking of various options in order to solve.

If we have extra time and end up trying to implement a CUDA version of the code, we will run into more fundamental problems. The memory access patterns of this algorithm are not amenable to coalescing, and there is a high degree of branching due to the various layers of recursive calls. All of these features make achieving a good speedup on CUDA very difficult, and a potentially very interesting challenge.

Resources

The primary resource that we need is access to PSC in order to benchmark our implementation on higher thread counts to check scaling. For smaller thread counts, we can simply use the GHC machines, which we have access to by default. If we are able to finish ahead of schedule and implement a CUDA version of the algorithm, we'll need GPU access, which we can also get on the GHC & PSC machines. Other than that, we don't need any other significant resources.

Goals & Deliverables

We plan to achieve all of the following. First, we will implement an efficient sequential algorithm for convex hull such as the Graham scan, which will serve as a baseline benchmark for how much faster our parallel algorithm can get relative to the fastest sequential algorithm. Then, we will implement the algorithm from the paper as closely as possible to how it is described and see how this performs on 1 thread compared to the efficient sequential algorithm. After this, we will parallelize the algorithm using OpenMP to be more scalable across multiple processors. The next step will be to profile and analyze both the sequential and parallel convex hull algorithms. We'll investigate and plot the performance on GHC and PSC with varying thread counts to measure the scalability of the code. We can also test the sensitivity to the problem size by testing with numerous different point counts. Finally, it will also be interesting to see the scalability of the algorithm on different types of inputs, in particular those where there are many points on the convex hull.

As for things we hope to achieve, something that would be nice would be improving the parallelism using other parallel programming models, such as CUDA and MPI. An even more ambitious stretch goal, if we had sufficient extra time, would be to extend our implementation to work for 3-D convex hulls as well.

Platform Choice

For this project, we will be implementing all of our algorithms in C++ due to its fast execution times and ergonomic support for OpenMP via pragmas. We chose the shared address space model with OpenMP because the data structures that get concurrently accessed in the pseudocode of the algorithm seem to be most directly implemented under the same address space. We decided to profile the code with GHC and PSC since these are the most easily accessible platforms to us and they should suffice in power to demonstrate how well our algorithms scale with large core counts (up to 128 for PSC).

Schedule

Week 1 – 03/24 - 03/30

For Week 1, we aim to understand the full parallel algorithm as well as implement the basic sequential algorithm as a baseline. We'll also write a test harness, consisting of a test-case generator to go along with the sequential solution that, in addition to acting as a performance baseline, will also act as a reference solution for testing purposes.

Week 2 – 03/31 - 04/06

During Week 2, we hope to implement the parallel algorithm on one processor in order to get a baseline performance measurement of how work-efficient this algorithm is. We don't foresee getting much more done this week, due to the exam and Carnival.

Week 3 – 04/07 - 04/13

In Week 3, our goal is to implement parallelism into our solution using OpenMP. This shouldn't require too many modifications beyond the adding of various pragmas, but the initial solution may rely heavily on atomic/critical pragmas that add a high degree of overhead.

Week 4 – 04/14 - 04/20

By the start of Week 4, we'd like to have a full parallel implementation working. Week 4 will be spent on benchmarking and optimizing this implementation in order to achieve a better speedup, especially at high thread counts. If we are able to do so faster than expected, we'll likely move on to attempting either an OpenMPI or CUDA implementation of this algorithm, but this is a stretch goal.

Week 5 – 04/21 - 04/27

Most of our time in Week 5 will be spent preparing our poster and wrapping up, including generating final benchmarks. If we were able to attempt a stretch goal, we may spend some time this week finishing the implementation of that as well.